
The Internet of the Future

Image: www.istock.com/ bluecinema

Data streams are exploding the world over. Managing this data surge is one of the biggest challenges of the digital age. Three communications engineers at the Technical University of Munich (TUM) developed an algorithm to do just this by pushing transmission rates close to their theoretical limits.

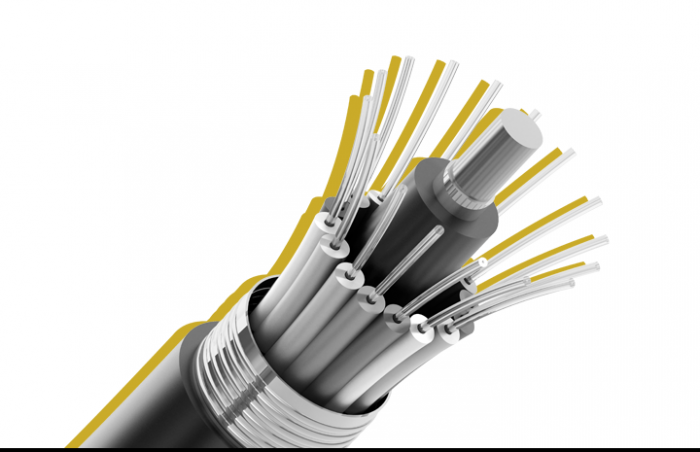

Three communications engineers at TUM set a world record in 2016 by transmitting one terabit of data per second through a limited frequency band of a single, wafer-thin optical fiber in a fiber cable. That is enough capacity to stream tens of thousands of HD films simultaneously, and it comes close to the highest speed theoretically possible over this kind of fiber optic channel. The ground-breaking technology was developed by TUM researchers Georg Böcherer, Fabian Steiner and Patrick Schulte in collaboration with the renowned industrial research lab Bell Labs.
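How high can the speed of a channel go? For an idealized band-limited channel with additive white Gaussian noise, the classic Shannon-Hartley formula C = B · log2(1 + SNR) gives the ceiling. The short Python sketch below evaluates it; the bandwidth and signal-to-noise values are hypothetical and not taken from the TUM experiment, and real optical fiber also suffers from nonlinear effects, so this is only an idealized reference point.

```python
import math


def shannon_capacity_bps(bandwidth_hz: float, snr_db: float) -> float:
    """Shannon-Hartley limit C = B * log2(1 + SNR) for an ideal AWGN channel."""
    snr_linear = 10 ** (snr_db / 10)  # convert decibels to a plain power ratio
    return bandwidth_hz * math.log2(1 + snr_linear)


# Hypothetical example values, chosen only for illustration.
BANDWIDTH_HZ = 50e9  # 50 GHz

for snr_db in (10, 15, 20):
    capacity = shannon_capacity_bps(BANDWIDTH_HZ, snr_db)
    print(f"SNR {snr_db:>2} dB -> at most {capacity / 1e9:.0f} Gbit/s")
```

Doubling the bandwidth doubles the ceiling, while a better signal-to-noise ratio raises it only logarithmically, which is why getting close to the limit matters so much.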

The key to their success involves going back to basics – to the mathematical foundations of information theory – to program a simple algorithm that works on the basis of probability. The algorithm is called RateX. It dynamically adapts signals to the transmission conditions of each channel, regardless of whether they are long-haul, transatlantic cables stretching thousands of kilometers or short-range wireless links between industrial robots in the Internet of Things.

“It is a universal architecture for all communication devices,” explains Professor Gerhard Kramer, Head of the Institute of Communications Engineering, where the three engineers carry out their research. The first chips with this algorithm are already being launched on the market, promising massive speed gains for the Internet – accelerating, for example, the latest 5G mobile communications, cloud services and video streaming. In just over ten years, Kramer expects RateX to become the standard method in billions of devices.

Every communication channel, whether fiber, air or copper, has physical characteristics that cap the rate at which data can be transmitted reliably. This maximum rate is known as the Shannon limit, after the information theorist Claude Shannon. In practice, it is almost impossible to reach this theoretical limit, but the algorithm developed at TUM comes close to it. It modulates signals based on probability calculations: over a fiber cable, for example, it sends low-amplitude optical signals more often than high-amplitude ones, which require more energy. The concept is known as “Probabilistic Constellation Shaping” (PCS). The algorithm also encodes the data in such a way that the chip on the receiving end can recover it with almost no losses. To do this, it transforms bits into sequences of symbols with a specific distribution. This mapping is fully reversible, allowing data to be transmitted faster and more reliably over longer distances.
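The idea of turning bits into symbol sequences with a chosen distribution, reversibly, can be illustrated with a minimal Python sketch. It is not the RateX implementation: the composition `{1: 7, 3: 5, 5: 3, 7: 1}` (16 amplitudes per block), the function names and the use of exact enumerative coding are all illustrative assumptions, and sign bits, error correction and the optical channel are left out. Each block of 22 input bits selects, by its lexicographic rank, one of the 5,765,760 sequences with that composition, so low amplitudes appear more often and every block has an average energy of 11, compared with 21 for equally likely amplitudes 1, 3, 5 and 7.

```python
from math import factorial
from typing import Dict, List

# Hypothetical composition: each block of 16 amplitudes contains seven 1s,
# five 3s, three 5s and one 7, so low amplitudes are used most often.
COMPOSITION: Dict[int, int] = {1: 7, 3: 5, 5: 3, 7: 1}


def count_sequences(counts: Dict[int, int]) -> int:
    """Number of distinct sequences with exactly these symbol counts (multinomial)."""
    result = factorial(sum(counts.values()))
    for c in counts.values():
        result //= factorial(c)
    return result


def bits_to_symbols(bits: List[int], counts: Dict[int, int]) -> List[int]:
    """Encode: return the sequence whose lexicographic rank equals the bits' value."""
    index = int("".join(map(str, bits)), 2) if bits else 0
    remaining = dict(counts)
    if index >= count_sequences(remaining):
        raise ValueError("too many input bits for this composition")
    out: List[int] = []
    for _ in range(sum(counts.values())):
        for sym in sorted(remaining):
            if remaining[sym] == 0:
                continue
            remaining[sym] -= 1
            block = count_sequences(remaining)  # sequences that continue with sym
            if index < block:
                out.append(sym)
                break
            index -= block  # skip every sequence that continues with sym
            remaining[sym] += 1
    return out


def symbols_to_bits(symbols: List[int], counts: Dict[int, int], k: int) -> List[int]:
    """Decode: recompute the lexicographic rank and write it as k bits."""
    remaining = dict(counts)
    index = 0
    for s in symbols:
        for sym in sorted(remaining):
            if sym == s:
                break
            if remaining[sym] == 0:
                continue
            remaining[sym] -= 1
            index += count_sequences(remaining)  # sequences ranked before s here
            remaining[sym] += 1
        remaining[s] -= 1
    return [int(b) for b in format(index, f"0{k}b")]


def mean_energy(symbols: List[int]) -> float:
    """Average squared amplitude of a block."""
    return sum(a * a for a in symbols) / len(symbols)


if __name__ == "__main__":
    k = count_sequences(COMPOSITION).bit_length() - 1  # bits that always fit
    data = [1, 0] * (k // 2)
    symbols = bits_to_symbols(data, COMPOSITION)
    assert symbols_to_bits(symbols, COMPOSITION, k) == data  # fully reversible
    print(symbols, mean_energy(symbols))
```

Because every output block has the same composition, the receiver only needs to re-rank the sequence to recover the bits exactly. The price is rate: 22 bits per 16 amplitudes instead of 32 bits for uniform 4-level amplitudes, a trade-off that a practical system would tune to the channel.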

The three inventors from TUM made a conscious decision not to protect their record-breaking algorithm with a patent. Instead, they made it publicly available. Just a few months after their sensational experiment, the first Internet corporations were already testing the technology and more than doubling the performance of their transatlantic cables. This marks the first step towards a new global standard capable of managing spiraling data volumes. Most global data traffic is carried by fiber optic cables on the floor of the world’s oceans. By 2021, the volume of data is expected to rise to 3.3 zettabytes, 127 times the volume of 2005. Until now, the operators of these cables have competed for the fastest speeds using proprietary, in other words secret, standards.
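The two figures quoted above imply a starting point and a growth rate. The Python snippet below works them out, assuming SI prefixes (1 zettabyte = 1,000 exabytes) and, purely for illustration, a constant yearly growth rate, which the forecast itself does not claim. It yields about 26 exabytes for 2005 and roughly 35 percent growth per year.

```python
VOLUME_2021_ZB = 3.3  # forecast for 2021 quoted in the text
GROWTH_FACTOR = 127   # 2021 volume relative to 2005, as quoted
YEARS = 2021 - 2005

# Implied 2005 volume, converted from zettabytes to exabytes (1 ZB = 1000 EB).
volume_2005_eb = VOLUME_2021_ZB / GROWTH_FACTOR * 1000

# Constant yearly rate that would compound to the quoted factor (an assumption).
annual_growth = GROWTH_FACTOR ** (1 / YEARS) - 1

print(f"Implied 2005 volume: about {volume_2005_eb:.0f} EB")
print(f"Implied average growth: about {annual_growth:.0%} per year")
```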

The record-breaking algorithm from TUM shows that the research community can make the Internet fit for the future by building technical solutions on the theoretical principles of communication technology. The three developers of RateX used theoretical predictions to develop a technology that works in the “real” world. Even the world record experiment took place under real-world conditions in the Deutsche Telekom network. Taking a step back to focus on theory might sound unusual, but Kramer, Professor of Communications Engineering, has already identified a host of other applications for this principle. These include data security, network reliability and the architecture of the new hyper-scale server centers that will be needed to store and process the torrent of data. “There are still many more research opportunities here,” concludes Kramer.

“Mathematical principles provided blueprints for our algorithm. We then tested them in real life. As long as our models are correct, they will also work in the real world. Recently in particular, we’ve had a lot of success with fiber optic transmissions.”

Gerhard Kramer, 2017, Alexander von Humboldt Professor at the Chair of Communications Engineering at the TUM

Image: Astrid Eckert & Andreas Heddergott / TUM